Theses

Generation and Relocalization of Parking Maps

C++, ROS2 | Porsche Engineering GmbH | Mönsheim, DE

Design, Simulation and Implementation of an Optimal Adaptive Filter

MATLAB, Verilog | Visvesvaraya Technological University | Bengaluru, IN

Projects

Disaster Response Robots | C++, ROS1

Grundlagen der Elektrotechnik Lab | Paderborn, DE

Autonomous robots can be of great help during natural disasters. Such a robot must be capable of performing multiple tasks: behavioral control, object recognition and handling, exploration, and mapping.

The mapping system plays a vital role in accurate localization. As part of the project, we reconstructed the LOAM (LiDAR Odometry and Mapping) pipeline using SLAM concepts. I extended the loop closure implementation using LiDAR scanner and camera data to reduce localization drift, applying various 2D and 3D keypoint extraction and descriptor algorithms from cross-platform libraries such as OpenCV.

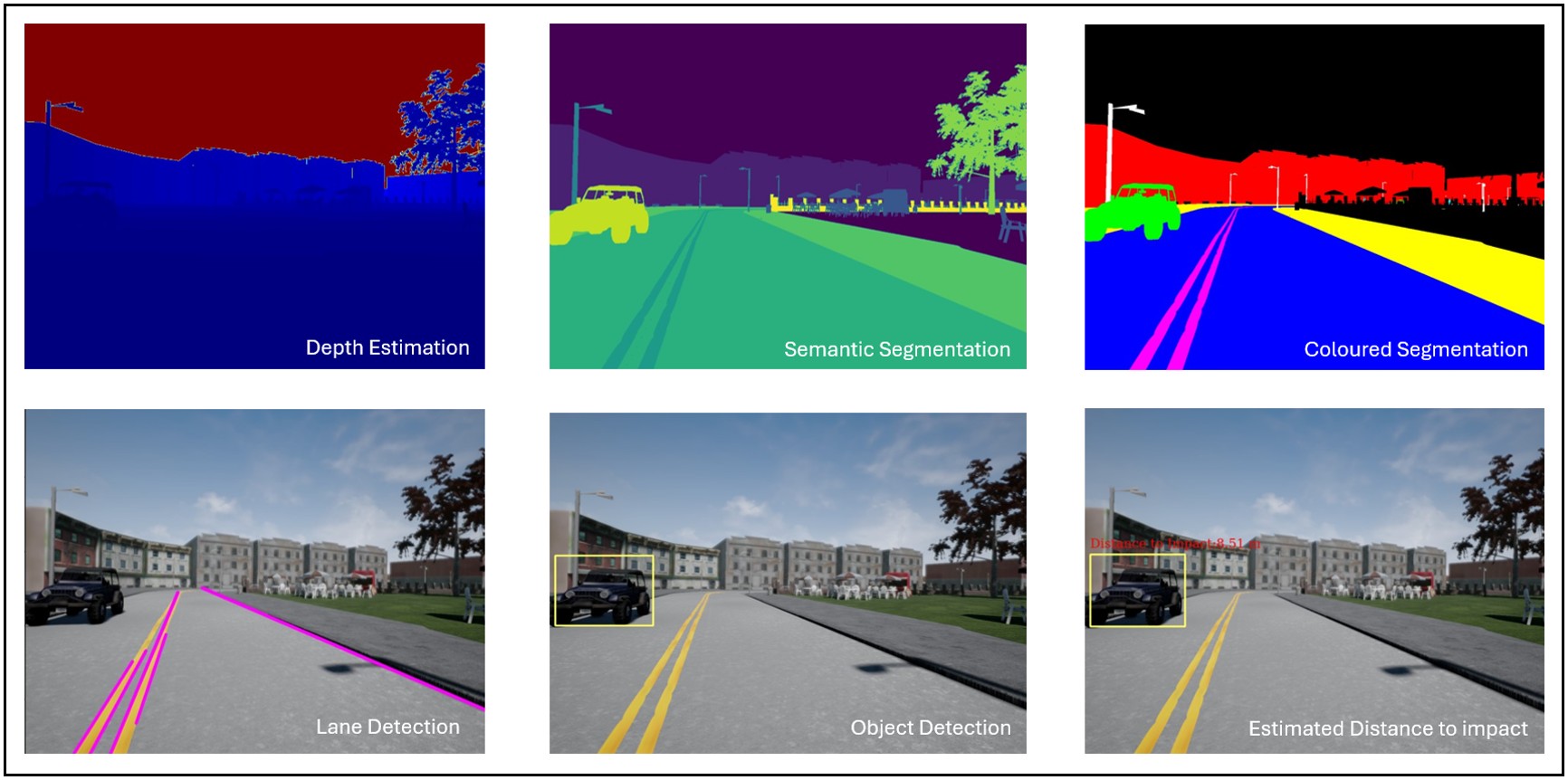
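Loop closure usually starts by matching keypoint descriptors between the current frame and a stored keyframe. The following is a minimal sketch, not the project's code: binary descriptors (such as 256-bit ORB descriptors) are modelled as Python integers, matched by brute-force Hamming distance, and filtered with Lowe's ratio test. The 0.75 ratio, the `min_matches` rule, and all function names are illustrative assumptions. In OpenCV the matching step would typically use `cv2.BFMatcher(cv2.NORM_HAMMING)` with `knnMatch(..., k=2)`, and a real loop closure would also verify the candidate geometrically before adding a constraint to the map.

```python
"""Brute-force Hamming matching with Lowe's ratio test (illustrative sketch).

Binary descriptors (e.g. 256-bit ORB) are modelled as Python ints.
Function names and thresholds are hypothetical.
"""


def hamming(a: int, b: int) -> int:
    # Number of differing bits between two binary descriptors.
    return bin(a ^ b).count("1")


def ratio_test_matches(query, train, ratio=0.75):
    """Return (query_idx, train_idx) pairs that pass Lowe's ratio test."""
    matches = []
    if len(train) < 2:
        return matches  # the ratio test needs a best and a second-best match
    for qi, qd in enumerate(query):
        # Sorted (distance, train_idx) pairs for this query descriptor.
        dists = sorted((hamming(qd, td), ti) for ti, td in enumerate(train))
        (best_d, best_i), (second_d, _) = dists[0], dists[1]
        # Keep the match only if it is clearly better than the runner-up.
        if best_d < ratio * second_d:
            matches.append((qi, best_i))
    return matches


def is_loop_closure_candidate(query, train, min_matches=2, ratio=0.75):
    """Hypothetical acceptance rule: enough unambiguous matches -> candidate."""
    return len(ratio_test_matches(query, train, ratio)) >= min_matches
```

Ambiguous descriptors, whose best and second-best distances are similar, are discarded. This matters for loop closure because repetitive structures in disaster scenes can otherwise produce false loops.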

Autonomous Vehicle Perception | Python, Computer Vision

Independent Development Projects | Leonberg, DE

Developed a perception pipeline for self-driving cars in Python, using OpenCV, NumPy, and custom perception algorithms. The system processes a pre-recorded dataset containing RGB images, depth maps, semantic segmentation masks, and object detection data. It performs key perception tasks: drivable space estimation using RANSAC, lane line detection, and object detection with distance estimation. By combining these vision-based techniques, the pipeline identifies drivable areas and obstacles in the vehicle's surroundings. This project demonstrates a comprehensive approach to autonomous vehicle perception, which is crucial for safe navigation.

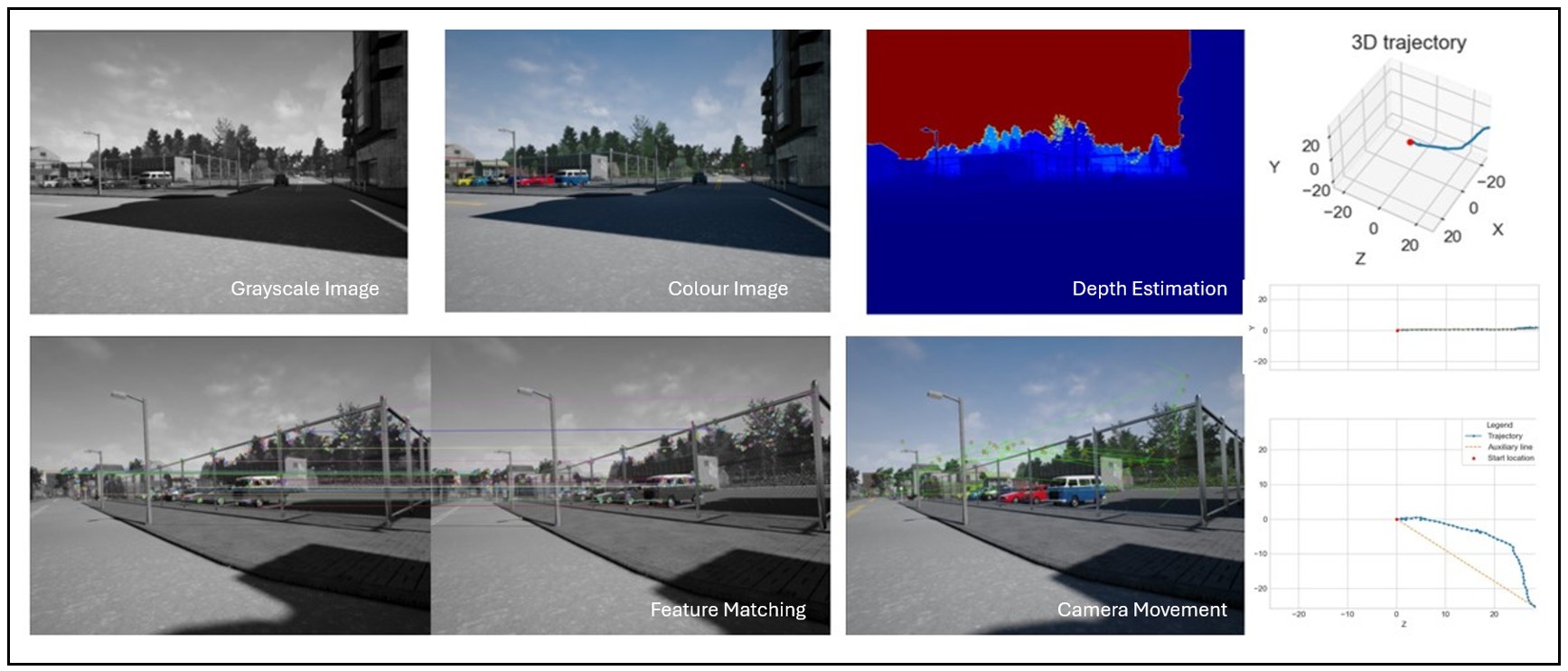
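A common way to estimate drivable space with RANSAC is to back-project the depth map into 3D points, fit a ground plane robustly, and treat the plane's inliers as candidate drivable pixels. The summary does not state the exact model, so the sketch below assumes that approach and shows the RANSAC loop in plain Python. The 200 iterations, the 0.05 m inlier threshold, and the function names are hypothetical choices. A NumPy implementation would vectorize the inlier test.

```python
import random


def plane_from_points(p1, p2, p3):
    """Unit-normal plane (a, b, c, d) with ax + by + cz + d = 0, or None if collinear."""
    u = [p2[i] - p1[i] for i in range(3)]
    v = [p3[i] - p1[i] for i in range(3)]
    # Plane normal is the cross product u x v.
    a = u[1] * v[2] - u[2] * v[1]
    b = u[2] * v[0] - u[0] * v[2]
    c = u[0] * v[1] - u[1] * v[0]
    norm = (a * a + b * b + c * c) ** 0.5
    if norm < 1e-12:
        return None  # degenerate sample: the three points are collinear
    a, b, c = a / norm, b / norm, c / norm
    d = -(a * p1[0] + b * p1[1] + c * p1[2])
    return a, b, c, d


def ransac_plane(points, iterations=200, threshold=0.05, seed=0):
    """Fit a plane to 3D points with RANSAC; returns (plane, inliers)."""
    rng = random.Random(seed)
    best_plane, best_inliers = None, []
    for _ in range(iterations):
        # Minimal sample: three points define a candidate plane.
        plane = plane_from_points(*rng.sample(points, 3))
        if plane is None:
            continue
        a, b, c, d = plane
        # Inliers lie within `threshold` of the plane (unit normal => true distance).
        inliers = [p for p in points
                   if abs(a * p[0] + b * p[1] + c * p[2] + d) < threshold]
        if len(inliers) > len(best_inliers):
            best_plane, best_inliers = plane, inliers
    return best_plane, best_inliers
```

Because the consensus set is counted rather than averaged, points on cars, curbs, or pedestrians do not pull the ground estimate. This robustness is the main reason to use RANSAC here instead of least squares.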

Visual Odometry for Camera Motion Estimation | Python, Pose Estimation

Independent Development Projects | Leonberg, DE

Implemented a visual odometry pipeline that uses feature extraction and matching to estimate camera motion from sequential images. The system uses ORB features, FLANN matching, and essential matrix decomposition (or PnP with RANSAC) to track movement and reconstruct the camera trajectory. The project integrates dataset handling, feature visualization, and trajectory estimation for real-time motion analysis. Outputs include matched-feature visualizations and 3D camera trajectory plots. This implementation serves as a foundation for further enhancements, such as advanced feature descriptors, bundle adjustment, and loop closure detection for improved accuracy.
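Whichever estimator is used (essential matrix decomposition or PnP), the per-frame relative poses must be chained into a global trajectory. The following is a minimal sketch, assuming each input `(R_rel, t_rel)` gives camera k+1's rotation and position expressed in camera k's frame. OpenCV's `cv2.recoverPose` returns the opposite direction, since it maps points from the first camera's frame into the second's, so its output would first pass through `invert_pose`. With a single camera, the recovered translation is known only up to scale, which is why a `scale` parameter is exposed. All function names here are hypothetical.

```python
import math


def mat_mul(A, B):
    # 3x3 matrix product A @ B.
    return [[sum(A[i][k] * B[k][j] for k in range(3)) for j in range(3)] for i in range(3)]


def mat_vec(A, v):
    # 3x3 matrix times 3-vector.
    return [sum(A[i][k] * v[k] for k in range(3)) for i in range(3)]


def transpose(A):
    return [[A[j][i] for j in range(3)] for i in range(3)]


def invert_pose(R, t):
    """Inverse of x' = R x + t, i.e. x = R^T x' - R^T t."""
    Rt = transpose(R)
    return Rt, [-c for c in mat_vec(Rt, t)]


def accumulate_trajectory(relative_motions, scale=1.0):
    """Chain camera motions into positions in the first camera's frame.

    Implements T_world_{k+1} = T_world_k * T_k_{k+1}:
        t_new = t + R @ (scale * t_rel),  R_new = R @ R_rel
    """
    R = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    t = [0.0, 0.0, 0.0]
    positions = [tuple(t)]
    for R_rel, t_rel in relative_motions:
        # Rotate the local step into the world frame using the *current* orientation.
        step = mat_vec(R, [scale * c for c in t_rel])
        t = [t[i] + step[i] for i in range(3)]
        R = mat_mul(R, R_rel)
        positions.append(tuple(t))
    return positions
```

The order of the two updates matters. The translation step must use the orientation *before* the new rotation is applied, otherwise the trajectory bends one frame too early.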

Automotive Radar Perception Pipeline | ROS2, CAN, Object Tracking

Independent Development Projects | Leonberg, DE

Implemented a modular radar perception pipeline for the Continental ARS408 radar sensor within the ROS 2 framework. The project centered on a ROS 2 lifecycle node that subscribed to real-time CAN bus messages, decoded raw radar frames, and generated dynamic object tracks with rich metadata, including position, velocity, orientation, and object classification. Lifecycle states were used for deterministic startup, shutdown, and fault handling, improving system reliability. The node published object lists, TF transforms, and RViz visualization markers, enabling straightforward integration into autonomous systems. Core components included low-level bitfield parsing, temporal object state management, and 3D visualization of the ego vehicle and target objects. The implementation demonstrates advanced skills in real-time sensor fusion, middleware architecture, and embedded robotics perception.
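The low-level bitfield parsing can be illustrated with a generic decoder. It reads an unsigned, big-endian (Motorola byte order) field from an 8-byte CAN payload and applies the usual `raw * scale + offset` conversion. The signal names, bit positions, scales, and offsets in `EXAMPLE_OBJECT_LAYOUT` are hypothetical placeholders for illustration only. The real values must come from the ARS408 CAN specification or its DBC file. Bit positions here are counted from the most significant bit of byte 0, which may differ from the start-bit convention in a DBC file.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Signal:
    name: str
    msb_offset: int  # position of the signal's MSB, counted from the MSB of byte 0
    length: int      # number of bits
    scale: float     # physical = raw * scale + offset
    offset: float


def extract_bits(payload: bytes, msb_offset: int, length: int) -> int:
    """Extract an unsigned big-endian bitfield from a CAN payload."""
    total_bits = len(payload) * 8
    if msb_offset < 0 or length <= 0 or msb_offset + length > total_bits:
        raise ValueError("bitfield lies outside the payload")
    value = int.from_bytes(payload, "big")
    shift = total_bits - (msb_offset + length)  # bits to the right of the field
    return (value >> shift) & ((1 << length) - 1)


def decode(payload: bytes, signals) -> dict:
    """Convert every signal in the layout to its physical value."""
    return {s.name: extract_bits(payload, s.msb_offset, s.length) * s.scale + s.offset
            for s in signals}


# HYPOTHETICAL layout for illustration only; take real positions, scales
# and offsets from the sensor's CAN specification / DBC file.
EXAMPLE_OBJECT_LAYOUT = [
    Signal("obj_id", 0, 8, 1.0, 0.0),
    Signal("dist_long_m", 8, 13, 0.2, -500.0),
    Signal("dist_lat_m", 21, 11, 0.2, -204.6),
]
```

Keeping the layout as data rather than hard-coded shifts makes it easy to add further message types and to cross-check each signal against the specification.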

Monocular Depth Estimation with Transformers | Python, Computer Vision

Independent Development Projects | Leonberg, DE

Developed an interactive depth estimation pipeline using the Hugging Face Transformers library and the Depth-Anything V2 model. The system accepts images from both local files and web URLs and generates predicted depth maps with a state-of-the-art transformer-based architecture. Hugging Face’s pipeline abstraction handles model loading and inference, with GPU acceleration when available. The application includes robust user input handling, image preprocessing, and result visualization with Matplotlib. This project demonstrates core skills in applying deep learning models to visual perception tasks, with potential applications in augmented reality, robotics, and autonomous systems, where depth estimation is critical for navigation, object interaction, and spatial understanding.

YOLO Models and Real-Time Detection Transformer | DNN, Object Detection

Independent Development Projects | Leonberg, DE

Performed a comprehensive evaluation of modern object detection architectures, including YOLOv8, YOLOv10, YOLO11, YOLO12, and RT-DETRv2, to analyze their suitability across a range of deployment scenarios. The project covered a spectrum of design approaches, from lightweight CNN-based models to advanced transformer-integrated frameworks, each striking a different trade-off between speed, accuracy, and hardware efficiency. Key innovations examined included open-vocabulary detection, box-free training, and end-to-end DETR-style pipelines. Developed and optimized custom benchmarking tools using PyTorch, TorchVision, and CUDA to measure real-time performance on representative datasets. The findings offer practical guidance for selecting detection models in robotics, autonomous driving, healthcare imaging, and retail analytics. This project demonstrates advanced proficiency in applied deep learning, model benchmarking, and real-time computer vision systems.

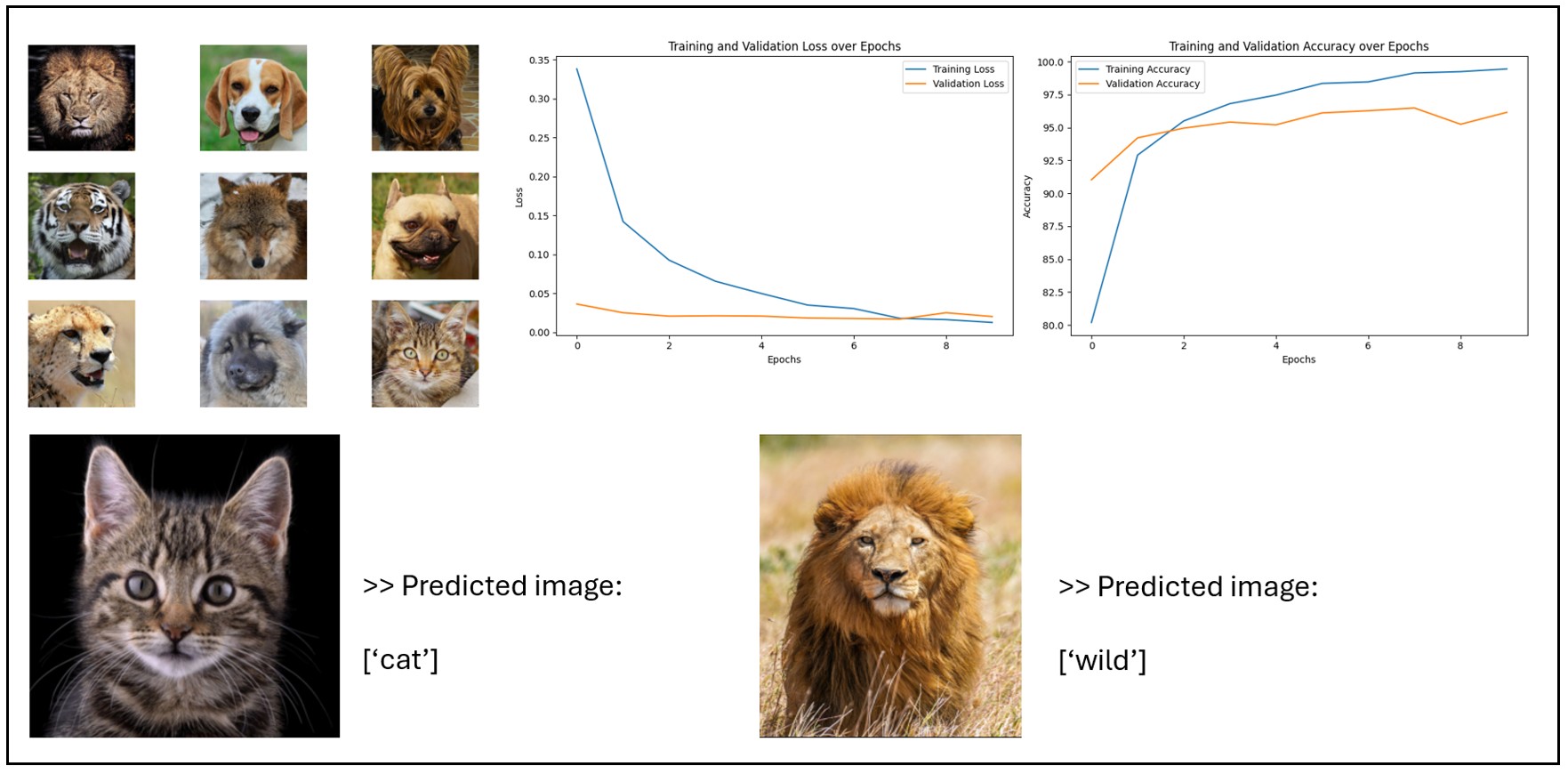
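Benchmarking tools for real-time detection generally need warm-up iterations and, on a GPU, explicit synchronization. CUDA kernels are launched asynchronously, so a host-side timer would otherwise capture only the launch. The following framework-agnostic harness is a hypothetical illustration, not the tool used in the project. `fn` would wrap a model's forward pass, and with PyTorch `sync` could be `torch.cuda.synchronize`.

```python
import statistics
import time


def benchmark(fn, inputs, warmup=5, runs=50, sync=None):
    """Measure per-call latency of fn (e.g. a detector's forward pass).

    sync: optional callable that blocks until queued device work is done.
    Returns mean/median/p95 latency in milliseconds and throughput in FPS.
    """
    if not inputs or runs < 1:
        raise ValueError("need at least one input and one timed run")
    # Warm-up: lets lazy initialisation, caching and autotuning settle.
    for i in range(warmup):
        fn(inputs[i % len(inputs)])
    if sync is not None:
        sync()
    latencies_ms = []
    for i in range(runs):
        x = inputs[i % len(inputs)]
        start = time.perf_counter()
        fn(x)
        if sync is not None:
            sync()  # include asynchronous device work in the measurement
        latencies_ms.append((time.perf_counter() - start) * 1000.0)
    latencies_ms.sort()
    mean_ms = statistics.fmean(latencies_ms)
    p95_idx = round(0.95 * (len(latencies_ms) - 1))  # nearest index into sorted latencies
    return {
        "mean_ms": mean_ms,
        "p50_ms": statistics.median(latencies_ms),
        "p95_ms": latencies_ms[p95_idx],
        "fps": 1000.0 / mean_ms if mean_ms > 0 else float("inf"),
    }
```

Reporting a tail percentile alongside the mean matters for real-time use. A model with a low average but occasional slow frames can still miss a control deadline.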

Deep Learning-Based Image Classification with CNN | Deep Learning, PyTorch

Independent Development Projects | Leonberg, DE

Developed a deep learning pipeline for image classification using a convolutional neural network (CNN) in PyTorch. The system automatically downloads and preprocesses datasets from Kaggle, applies transformations, and uses a custom dataset loader. The CNN consists of convolutional layers with ReLU activations and max pooling, trained with cross-entropy loss and the Adam optimizer. The project tracks training progress, evaluates model accuracy on test data, and supports inference on new images. Planned enhancements include data augmentation, transfer learning with pre-trained models, and hyperparameter tuning for improved performance.
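The layer types named above can be illustrated without any framework. The following dependency-free sketch is for illustration only, since the project itself uses PyTorch modules such as `nn.Conv2d`, `nn.ReLU`, `nn.MaxPool2d`, and `nn.CrossEntropyLoss`. It computes a single-channel "valid" convolution, ReLU, 2x2 max pooling, and the cross-entropy loss from raw logits. The function names are hypothetical.

```python
import math


def conv2d(image, kernel, bias=0.0):
    """Single-channel 'valid' 2D cross-correlation (what DL frameworks call convolution)."""
    kh, kw = len(kernel), len(kernel[0])
    oh, ow = len(image) - kh + 1, len(image[0]) - kw + 1
    return [[bias + sum(image[i + u][j + v] * kernel[u][v]
                        for u in range(kh) for v in range(kw))
             for j in range(ow)]
            for i in range(oh)]


def relu(fm):
    # Element-wise max(0, x).
    return [[max(0.0, x) for x in row] for row in fm]


def max_pool2d(fm, size=2):
    """Non-overlapping max pooling (stride == size); leftover rows/cols are dropped."""
    return [[max(fm[i + u][j + v] for u in range(size) for v in range(size))
             for j in range(0, len(fm[0]) - size + 1, size)]
            for i in range(0, len(fm) - size + 1, size)]


def cross_entropy(logits, target):
    """-log softmax(logits)[target], using the log-sum-exp trick for stability."""
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[target]
```

For example, a vertical-edge kernel `[[-1, 1], [-1, 1]]` applied to a 5x5 image whose right three columns are 1 and left two columns are 0 produces, after ReLU and pooling, `[[2.0, 0.0], [2.0, 0.0]]`. The strong response marks the edge column. Like `nn.CrossEntropyLoss`, `cross_entropy` takes unnormalized logits, so no softmax layer is needed at the end of the network.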

Design and Implementation of a DTMF-Based Home Automation System

Embedded C/C++ | Visvesvaraya Technological University | Bengaluru, IN

Being able to control home appliances from anywhere is especially valuable for people occupied with many day-to-day tasks at their workplace. In this project, I designed a home automation system that can be controlled from any mobile phone using DTMF (Dual-Tone Multi-Frequency) tones, a telephone signaling technology in use since the introduction of touch-tone dialing in the 1960s.
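Each DTMF key is sent as the sum of one low-group tone (697, 770, 852, or 941 Hz) and one high-group tone (1209, 1336, 1477, or 1633 Hz). The project summary does not say how the tones were decoded; a dedicated decoder IC is a common choice. As a software alternative, the Goertzel algorithm measures signal power at just these eight frequencies. The sketch below is written in Python for consistency and is an assumption, not the project's firmware. On a microcontroller, the same recurrence would be written in C. The 8 kHz sample rate and the `min_power` threshold are hypothetical, and production decoders also check twist and harmonics.

```python
import math

LOW_FREQS = (697, 770, 852, 941)        # row frequencies (Hz)
HIGH_FREQS = (1209, 1336, 1477, 1633)   # column frequencies (Hz)
KEYPAD = ("123A", "456B", "789C", "*0#D")


def goertzel_power(samples, sample_rate, freq):
    """Squared DTFT magnitude of `samples` at `freq`, via the Goertzel recurrence."""
    coeff = 2.0 * math.cos(2.0 * math.pi * freq / sample_rate)
    s_prev = s_prev2 = 0.0
    for x in samples:
        s = x + coeff * s_prev - s_prev2
        s_prev2, s_prev = s_prev, s
    return s_prev ** 2 + s_prev2 ** 2 - coeff * s_prev * s_prev2


def detect_dtmf(samples, sample_rate=8000, min_power=100.0):
    """Return the pressed key, or None if no tone pair is strong enough."""
    low_p = {f: goertzel_power(samples, sample_rate, f) for f in LOW_FREQS}
    high_p = {f: goertzel_power(samples, sample_rate, f) for f in HIGH_FREQS}
    low = max(low_p, key=low_p.get)     # strongest row tone
    high = max(high_p, key=high_p.get)  # strongest column tone
    if low_p[low] < min_power or high_p[high] < min_power:
        return None
    return KEYPAD[LOW_FREQS.index(low)][HIGH_FREQS.index(high)]
```

Goertzel is attractive on small microcontrollers because it needs only one multiply-accumulate loop per frequency, rather than a full FFT, to check the eight DTMF tones.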

Line Follower Robot Using an AVR Microcontroller Development Board

Embedded C/C++ | Visvesvaraya Technological University | Bengaluru, IN

A line follower is an autonomous robot that follows either a black line on a white surface or a white line on a black surface. The robot must detect a particular line and keep following it. Line-following robots are popular for their accurate line detection, based on the analog outputs of reflective optical sensors, and for their home-made encoders, which help record information about the racing track.
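The summary does not specify the control law. A common approach, sketched here as an assumption, is to compute a weighted-average line position from the analog reflective-sensor readings and steer with a proportional controller on a differential drive. The code uses Python for consistency; on the AVR it would be C reading the ADC channels. The normalization of readings to 0..1 (higher means more line under the sensor), the gains, and the function names are all hypothetical.

```python
def line_position(readings, weights=None):
    """Weighted-average line position in [-1, 1] from normalised sensor readings.

    Assumes readings are 0..1 with higher values meaning more line under the sensor.
    Returns None when no sensor sees the line.
    """
    n = len(readings)
    if n < 2:
        raise ValueError("need at least two sensors")
    if weights is None:
        # Evenly spaced sensor positions from -1 (far left) to +1 (far right).
        weights = [-1.0 + 2.0 * i / (n - 1) for i in range(n)]
    total = sum(readings)
    if total <= 0:
        return None  # line lost
    return sum(w * r for w, r in zip(weights, readings)) / total


def motor_speeds(position, base=0.6, kp=0.5):
    """Proportional steering: slow the wheel on the side the line is on.

    Returns (left, right) duty cycles clamped to [0, 1].
    """
    correction = kp * position
    left = min(1.0, max(0.0, base + correction))
    right = min(1.0, max(0.0, base - correction))
    return left, right
```

Using the analog readings, rather than thresholding each sensor to on/off, gives a continuous position estimate and therefore smoother steering. A derivative term can be added if the robot oscillates at higher speeds.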